More and more companies offer usability research of various kinds. Some of it is good, some a little worse. If you are a client and a manager who deals with usability only occasionally, you have probably wondered what you should actually discuss with the specialists.

Together with Robert Drozd (Webaudit.pl), we have prepared a report/guide: „Badania użyteczności – o co zapytać specjalistę” ("Usability research: what to ask a specialist").

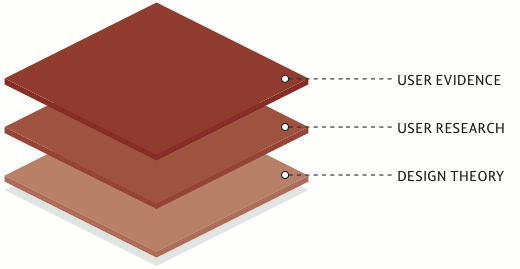

In it we cover five main topics that a client should discuss with their usability specialist:

- Which usability evaluation methods are right for your project?

- What standards will the study follow, and what will be evaluated?

- Which users will take part in the study?

- Will the answers you receive be helpful and accurate?

- How useful will the delivered report be?

The report is an adaptation of guidelines created by members of the UK chapter of the Usability Professionals’ Association. UK UPA and UPA gave us permission to use them, so we translated the document and updated it, adapting it to Polish conditions at the same time.

We softened some of the recommendations (including those concerning ISO). We kept a few others, though, knowing that it is still a little too early for them. The report is meant to set a direction, not merely to sanction the status quo.

Before publication we ran the report past specialists and clients we are on friendly terms with. The feedback was unambiguous: there is a need to provide more advanced knowledge for clients (and for the community).

Introductory education in usability is quite good here: conferences, training courses, books. That is why we think it is worth going beyond the basics, so that clients are also aware of the less obvious aspects of our services.

Download links:

- PDF format (recommended for reading on a computer screen)

- MOBI format (for Kindle, although the PDF can be read there too)

- EPUB format (for other e-readers)

And for comparison: the original recommendations in English (PDF format).

For the report we launched a separate site, usability.org.pl, but for now there is nothing on it besides the report. :-)
